Human Error: How To (Accurately) Identify & Address It Using Performance Models

By Joanna Gallant, owner/president, Joanna Gallant Training Associates, LLC

After giving a recent course on reacting to human error, one of the attendees sent me a variety of questions about how to apply the human performance models we discussed in FDA-regulated environments. Answering them was a good exercise in melding the two sets of concepts, and the answers seemed worth sharing more widely in the form of a two-part, Q&A-style article. Part 1 provided regulatory context, addressed confidentiality concerns, and walked through an application based on an actual FDA 483. This article explains how to accurately identify human errors, determine when a deviation or nonconformance requires CAPA, and get started using human performance improvement tools and processes in your organization.

Can something truly be a human error? And can we really say in a deviation or nonconformance (NC) that a well-trained employee had a “slip” or a “lapse”?

If you can first justify that the situation is not a system or process failure, why couldn’t it be a human error? Human error is a legitimate thing. Humans do have error rates — they’re just not as frequent as the typical number of “human error” root causes identified in deviations/NCs would lead us to believe.

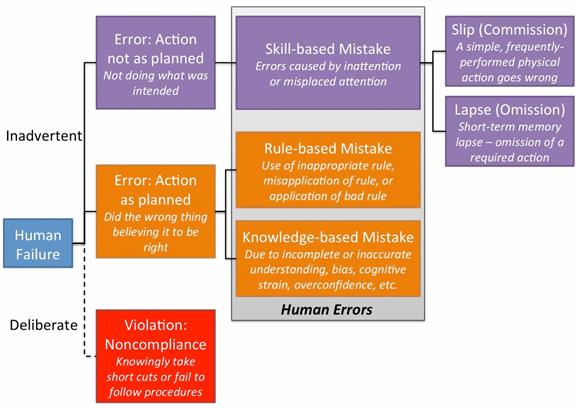

That being said, we should understand what human error really is, so that we can accurately determine when and where it may occur. This is where the Skills, Rules, Knowledge (SRK) / Generic Error Modeling System (GEMS) model is helpful.

Figure 1: Representation of the SRK/GEMS (adapted from Systemico’s human error model)

The model shows that human failures present as someone doing the wrong thing either intentionally (deliberate) or unintentionally (inadvertent). The inadvertent failures are considered human errors, and they fall into one of three categories: skill-based errors, rule-based mistakes, or knowledge-based mistakes. Skill-based errors can be further broken down into slips and lapses, both of which occur due to a lack of attention to the task at hand.

Here’s an example of a situation that may be a true human error:

An operator performs a task that requires manually calculating and then mentally rounding a value and recording it in a batch record. He performs the calculation, mentally rounds the result, and has in his head what he needs to write down. A coworker arrives, interrupting him and diverting his attention from what he’s doing. He then writes a truncated rather than a rounded value.

The operator then asks the coworker to verify the step. He hands the documentation to the verifier but continues talking to him, which distracts the verifier and causes him to miss the rounding error. Processing continues, using the incorrect result.

Later, QA identifies the rounding error in the batch record. Because processing used the incorrect value, the error carried through every calculation from that point forward. In this instance, the impact on the batch was minimal: no limits or specifications were exceeded.
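To make the arithmetic concrete, here is a minimal sketch with hypothetical numbers (the article gives no actual values). It assumes the result must be recorded to one decimal place using round-half-up, and that a later step multiplies the recorded value by an assumed factor, so the truncation error is carried forward.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP

# Hypothetical calculated value; not taken from the article.
raw_result = Decimal("12.37")
step = Decimal("0.1")  # assumed recording precision: one decimal place

rounded = raw_result.quantize(step, rounding=ROUND_HALF_UP)  # value that should be recorded
truncated = raw_result.quantize(step, rounding=ROUND_DOWN)   # value that was recorded

# Assumed downstream step: later calculations use whatever value was recorded.
factor = Decimal("4")
expected_downstream = rounded * factor
actual_downstream = truncated * factor

print(f"recorded {truncated} instead of {rounded}")
print(f"downstream result {actual_downstream} instead of {expected_downstream}")
print(f"carried-forward error: {expected_downstream - actual_downstream}")
```

A 0.1 difference at the recording step becomes 0.4 after one multiplication, which is why a single truncation can alter every calculation that follows it.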

Upon investigation, the supervisor identified no gaps or issues in the process: the calculation is clearly and correctly defined in the procedure. He verified that both people had successfully completed training on the rounding procedure and on the current version of the process procedure. Additionally, both had successfully completed and checked this calculation many times in the past with no errors. The supervisor addressed the error with each individual, and both said they hadn't been paying full attention to the task and did not realize an error had occurred.

In this example, we have what may be a true human error — a one-time event where trained personnel executing a well-defined process had their attention diverted from the task, resulting in a mistake due to inattention.

If we can justify this as the situation, having identified that no other process/system issues existed, then why not call it a slip or lapse, as appropriate? Again, it can happen. It just shouldn’t happen frequently.

However, even if it is a true human error, we shouldn’t necessarily assume that only the involved personnel are at fault. When rule- or knowledge-based errors occur, we should also look at other processes — training and oversight, in particular — to identify factors that may have contributed to the mistake.

For example, a rule-based mistake occurs: A new operator misapplies a rounding rule and rounds all values instead of just the final value, which generates an out-of-specification (OOS) result. When this error is addressed, we should also question why the person wasn't appropriately prepared for the task. Did the training not provide them enough practice? Did practice examples reflect the operational situations the person would face? Does the procedure specify that only the final value should be rounded?
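The difference between rounding only the final value and rounding every value can be shown with a small sketch. The measurements, the one-decimal rounding rule, and the specification limit below are all hypothetical, chosen only to show how misapplying the rule can push a result out of specification.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_half_up(value: Decimal, quantum: str) -> Decimal:
    """Round value to the given quantum (e.g. "0.1") using round-half-up."""
    return value.quantize(Decimal(quantum), rounding=ROUND_HALF_UP)


# Hypothetical intermediate values; not taken from the article.
measurements = [Decimal("2.45"), Decimal("2.45"), Decimal("2.45")]
upper_limit = Decimal("7.4")  # hypothetical specification limit

# Rule as assumed here: carry full precision, round only the final value.
final_only = round_half_up(sum(measurements), "0.1")

# Rule misapplied: round each value first, then round the total.
round_each = round_half_up(sum(round_half_up(m, "0.1") for m in measurements), "0.1")

print(final_only, final_only <= upper_limit)  # 7.4 True
print(round_each, round_each <= upper_limit)  # 7.5 False
```

Each early rounding adds up to 0.05, and those small increments accumulate into a total that exceeds the limit, even though every individual rounding looks reasonable.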

With knowledge-based mistakes, cognitive strain errors can occur when a person is multitasking: mentally managing the information needed to accomplish several tasks at once may require more focus than the person can provide. In this situation, we should identify why the person was multitasking. Were they assigned too many tasks at once? Is the department under-resourced? Was the supervisor aware that the person was multitasking and unable to function at the required level?

These are only two examples of how other processes should be considered when investigating human errors. Other situations may call for addressing hard-to-follow procedure formats, equipment that is not user-friendly, or other issues that point to problems elsewhere in the system.

Does every deviation/NC require investigation and corrective action — even the low-risk ones?

Addressing the CAPA part of the question first: Would a CAPA be necessary in the rounding error example? Maybe not. A risk assessment may classify it as a low-risk situation because:

- Severity is low: The error had minimal batch impact (no limits or specifications were exceeded), and similar rounding errors in the future likely would not have a significant batch impact either.

- Detectability is high: The calculations are checked at least twice (by the verifier and by QA), and the QA check caught the error that the verifier missed.

- Frequency is low: Both individuals had correctly performed and checked this calculation many times before with no errors.
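One common way to formalize an assessment like this, though the article does not prescribe it, is an FMEA-style risk priority number (RPN): the product of severity, frequency (usually called occurrence in FMEA), and detection scores. Note that in FMEA a *high* detectability earns a *low* detection score. The 1 to 5 scales, the scores assigned to the rounding example, and the CAPA threshold below are hypothetical; a real program would define them in its own risk management procedure.

```python
from dataclasses import dataclass

# Hypothetical threshold above which a formal CAPA would be required.
CAPA_THRESHOLD = 27


@dataclass
class RiskScore:
    """FMEA-style scores on an assumed 1-5 scale, where 1 is the best case."""
    severity: int   # 1 = negligible batch impact, 5 = critical impact
    frequency: int  # 1 = rare, 5 = frequent (FMEA "occurrence")
    detection: int  # 1 = almost certain to be caught, 5 = unlikely to be caught

    def __post_init__(self) -> None:
        for name in ("severity", "frequency", "detection"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    def rpn(self) -> int:
        # Risk priority number: product of the three scores (range 1-125).
        return self.severity * self.frequency * self.detection


def needs_formal_capa(score: RiskScore) -> bool:
    return score.rpn() > CAPA_THRESHOLD


# Rounding example: low severity, low frequency, high detectability.
rounding_error = RiskScore(severity=2, frequency=1, detection=2)
print(rounding_error.rpn(), needs_formal_capa(rounding_error))  # 4 False
```

Multiplying the scores means that a single high factor (for example, an error that checks are unlikely to catch) can raise the overall priority even when the other two factors are low.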

We could use this to justify requiring no action beyond the supervisor pointing out the error to the involved operators. For individuals who simply had an off day, a discussion with their supervisor may be enough to set things right. If further action is deemed necessary, follow-up checks that monitor the operator's and verifier's subsequent work for similar errors may be appropriate.

However, from a different perspective, this error shows that, while this process is often executed correctly, it has possible failure points: Both the operator and the verifier missed the rounding error. Manual calculations and other manual operations can, and on occasion will, fail. So a CAPA could be identified to protect against the failure recurring. Automating the calculations and rounding would reduce the possibility of future errors.
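As a rough illustration of that kind of automation, the sketch below has the system perform both the calculation and the rounding, and flags any manually entered value that does not match. The calculation (quantity times concentration), the input values, and the one-decimal round-half-up rule are hypothetical placeholders, not a description of any particular batch record system.

```python
from decimal import Decimal, ROUND_HALF_UP

# Assumed rule for illustration: record results to one decimal place, round half up.
RECORDING_QUANTUM = Decimal("0.1")


def calculate_and_round(quantity: Decimal, concentration: Decimal) -> Decimal:
    """Hypothetical calculation: the system, not the operator, computes and rounds."""
    raw = quantity * concentration
    return raw.quantize(RECORDING_QUANTUM, rounding=ROUND_HALF_UP)


def entry_matches(entered: Decimal, quantity: Decimal, concentration: Decimal) -> bool:
    """Return False when a manually entered value differs from the system value."""
    return entered == calculate_and_round(quantity, concentration)


expected = calculate_and_round(Decimal("3.5"), Decimal("3.534"))
print(expected)  # 12.4
print(entry_matches(Decimal("12.3"), Decimal("3.5"), Decimal("3.534")))  # False
```

Removing the mental rounding step eliminates the point where the interruption caused the slip, and the automatic comparison does not depend on the verifier's attention.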

We also don't know whether other operators/verifiers have made the same mistake. The example only discusses the two people involved in this specific situation. Looking at a broader data set may reveal several similar errors in manual calculations. If so, a CAPA should be considered, because the failure is broader than initially identified.
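A broader look can start with something as simple as counting deviations by category and noting how many different people were involved. The record layout, IDs, categories, and the "seen at least twice" cutoff below are hypothetical; in practice the data would come from your deviation/NC tracking system.

```python
from collections import Counter

# Hypothetical deviation records; field names and values are illustrative only.
deviations = [
    {"id": "DEV-001", "category": "manual calculation", "operator": "A"},
    {"id": "DEV-002", "category": "manual calculation", "operator": "B"},
    {"id": "DEV-003", "category": "labeling", "operator": "C"},
    {"id": "DEV-004", "category": "manual calculation", "operator": "D"},
]


def recurring_categories(records, min_count=2):
    """Return categories seen at least min_count times, with the distinct operators involved."""
    counts = Counter(r["category"] for r in records)
    result = {}
    for category, count in counts.items():
        if count >= min_count:
            operators = sorted({r["operator"] for r in records if r["category"] == category})
            result[category] = (count, operators)
    return result


print(recurring_categories(deviations))
# {'manual calculation': (3, ['A', 'B', 'D'])}
```

When the same category recurs across several different people, that pattern points away from individual slips and toward a process weakness worth a CAPA.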

To loop back to the first half of the question and tie all of this together, I do believe that every deviation/NC requires some level of investigation, root cause analysis (RCA), and corrective action.

Risk analysis shows that some deviations/NCs pose higher risks than others. And those that pose product quality or patient safety risks require thorough investigation, RCA, and CAPAs.

However, minor/low-risk situations still result from a process issue or problem, and they should not be ignored or excluded from the process simply because they didn't have a significant impact this time. Disregarding minor/low-risk situations allows the problem to continue, which will likely create a larger problem the next time it occurs.

Maybe the better way to state it is that for low-risk situations, a less formal approach to investigation, RCA, and CAPA is appropriate.

Consider our “low-risk” rounding error example. It went through a simple investigation, RCA, and CAPA process. When the error was identified, it was assessed to determine batch impact. The supervisor investigated whether the involved operators were trained, how the error occurred, and whether their prior performance was acceptable. This led to identifying human error as the root cause. The supervisor took corrective action by addressing the error with the involved personnel, which hopefully leads to a behavior adjustment. So all three elements were, in fact, done, but in a less formal manner that befitted the situation.

What are some first steps to begin using human performance improvement tools and processes?

Use these tools and processes where you have existing human errors/performance issues to correct, or to help identify ways to continuously improve performance.

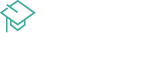

In the case of identified human errors/performance issues, these are the gaps that would be identified in the Gap Analysis step of the ISPI Human Performance Technology (HPT) Model (Figure 2). From there, define specifically how Actual Performance differs from Desired Performance, then investigate to understand the existing situation (Environmental Analysis) and identify possible causes contributing to the errors/issues that are occurring (Cause Analysis).

Figure 2: ISPI Performance Improvement/Human Performance Technology Model

During the investigation, talk to the involved operator(s) about the error/issue, and ask how/why it happened, how the error could be avoided or eliminated, what is needed to enable correct performance, and whether they understand the expectations for the task. Observe the task(s) and identify where distractions or multitasking occur, verify personnel are performing as expected, and verify that the procedures have enough information to guide task performance.

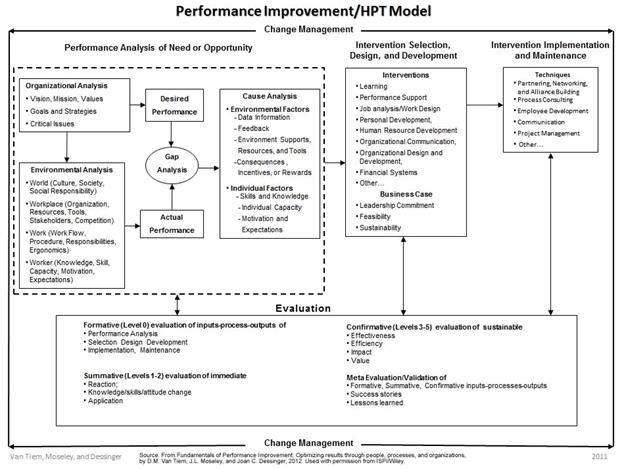

Look for answers to the questions presented in the Behavioral Engineering Model (Figure 3):

- Do personnel know what’s expected of them and what the priorities are, and have they received feedback on their performance against those expectations?

- Do they have the correct resources (equipment and procedures, work environment, time), and have they been designed to enhance performance?

- What incentives do they have to perform the task correctly? Is correct performance rewarded and poor performance addressed?

- Do they have the knowledge and skills to perform the task as expected?

- Are they physically capable of performing the assigned task, and is the task scheduled at optimum times for performance?

- Does the culture support proper performance of the task? Are personnel motives and company incentives aligned?

Figure 3: Gilbert's Behavioral Engineering Model (adapted from Deb Wagner's HPT Toolkit)

Alternatively, to use the model for continuous improvement when no known performance issue exists, start at the Organizational Analysis step in the HPT Model. Identify the specific situation to be improved, and then define Desired Performance and what is needed to support it. Next, in the Environmental Analysis step, identify what currently exists in the organization and how well it's functioning to define Actual Performance. Then, in the Gap Analysis step, identify any gaps between what's needed (Desired Performance) and what exists (Actual Performance) that could lead to performance issues.

One starting point for the process can be addressing undesired behaviors. These should be relatively obvious (poor attitude, poor performance, etc.) and they are gaps that should be addressed where they exist. Or, select a small group and try the process in a desired improvement initiative in that group. Either approach should illustrate how the process works and what kind of results can be achieved in a microcosm of the larger company environment.